The IceCube Neutrino Observatory recently took center stage at Supercomputing 2019, the largest high-performance computing conference in the world.

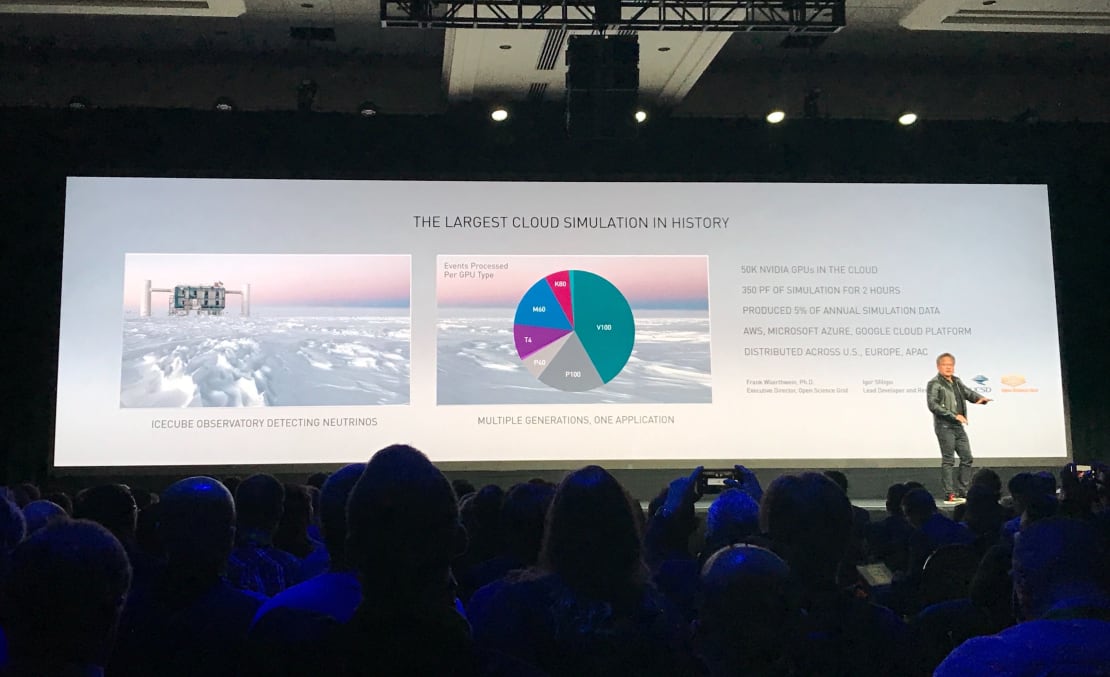

On the evening of Monday, November 18, 2019, NVIDIA CEO Jensen Huang gave a keynote address highlighting science projects that use NVIDIA hardware, as well as forthcoming products aimed at scientific innovation, particularly in high-performance computing. One of these projects was a recent NSF-funded experiment, a collaboration between the San Diego Supercomputer Center and the Wisconsin IceCube Particle Astrophysics Center (WIPAC). In it, all available graphics processing units (GPUs) from three cloud providers (Amazon Web Services, Google Cloud Platform, and Microsoft Azure) were combined into a single global resource pool and used to simulate the detector's response, helping researchers better understand the data collected by the IceCube Neutrino Observatory. In all, some 51,500 GPUs were used during the approximately two-hour experiment, conducted on Saturday, November 16.

The GPU cloudburst experiment had three goals: to determine whether researchers could create a resource pool that mimics future exascale-class computing resources, to test whether researchers were ready to use such resources, and to produce meaningful scientific output during the experiment. All three goals were achieved, showing that IceCube is ready to use future exascale-class resources for its computing needs. Using this resource pool, IceCube produced Monte Carlo simulations, which help separate signal from noise and enable improved reconstruction techniques.

The experiment also involved the University of Wisconsin–Madison’s Center for High Throughput Computing, the Open Science Grid, the Pacific Research Platform, and Internet2. IceCube collaborators Benedikt Riedel and David Schultz, both from WIPAC, were instrumental in running the demo.

To learn more about this experiment, read the press release on the WIPAC website. The full keynote is also available to watch online; Huang begins talking about IceCube at 01:06:52.