Although the ubiquitous atmospheric neutrinos are treated as background in many IceCube analyses, they also allow for precision measurements of neutrino properties. Such measurements were once the realm of large particle physics experiments like the ones at CERN and Fermilab, or of neutrino detectors dedicated to studying neutrino oscillations as accurately as possible.

With better and larger neutrino telescopes on the horizon, researchers are now designing more efficient analysis techniques that will boost our understanding of neutrinos and advance searches for new physics, including additional neutrino flavors or new interactions. These techniques not only provide more accurate and robust results, but also reduce the cost and time of computation, which could otherwise limit improvements in the design of new detectors or the discovery potential of existing facilities. Details of these new techniques are given in a paper by the IceCube-Gen2 Collaboration submitted this week to Computer Physics Communications.

When trying to understand neutrino interactions in or around IceCube, scientists compare what we know with what nature is telling us, i.e., what our theories predict with what our detector measures. As happens in competitive sports, where the transition from great performance to perfection and victory requires more advanced techniques, moving from the first great measurements to the best measurements ever drives the design of new state-of-the-art analyses.

Neutrinos have been shown to be extremely interesting particles that could potentially unlock evidence for predicted, and maybe also unpredicted, new physics. The fact that neutrinos oscillate, or morph from one type to another as they travel through space and matter, was the first observation of a quantum effect at large scales. Years after this discovery, physicists are still trying to figure out what these oscillations can tell us about matter and whether these fleeting neutrinos can also reveal the properties of new physics, such as the nature of dark matter or the existence of still unknown laws of physics.

Nature provides huge numbers of neutrinos and, thanks to its denser infill array DeepCore, IceCube can now perform very precise measurements of the neutrinos interacting near or in the detector. Future extensions of IceCube, known as IceCube-Gen2, as well as other neutrino telescopes in water will push these measurements to the next level of accuracy.

The uncertainty about the workings of nature propagates into a set of different parameters and models in our theories. To prove which one is correct, or at least rule out those that are not, scientists need to produce simulations at the level of precision at which the effects of these theories can be measured. Thus, if nature is providing us with tens of thousands of neutrinos, even millions of them, we need to produce large samples of simulated events and tune them to the specifics of each relevant theory. In addition, we need to mimic how these theoretical predictions are changed by the design and performance of our detector.

For an experiment like IceCube, and for other very large neutrino detectors to come, these simulations require an outlandish amount of computing resources. The larger the detector and the more accurate the measurement, the more critical it becomes what computers can do in a reasonable amount of time, to the point that a lack of simulations could delay or even prevent an analysis, and thus perhaps a discovery.

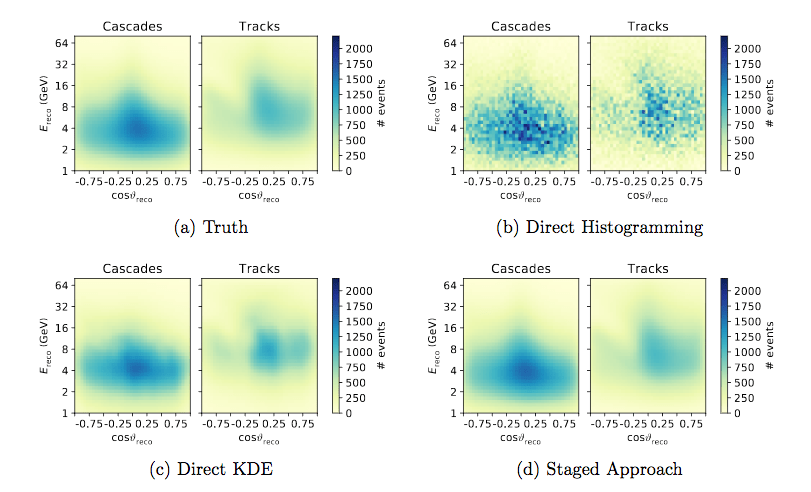

The new techniques that IceCube researchers present in the paper now on arXiv reduce the amount of simulation computation by dividing the production into four phases or stages: i) theoretical predictions of unoscillated neutrinos; ii) adding oscillation effects, which change the flavor content of the sample; iii) integrating the effects of the detector, i.e., taking into account the probability that a given neutrino interacts in or near the detector and is later selected as an interesting event for a specific analysis; and iv) reconstruction, i.e., the transformation of raw data into the physical properties of the events.

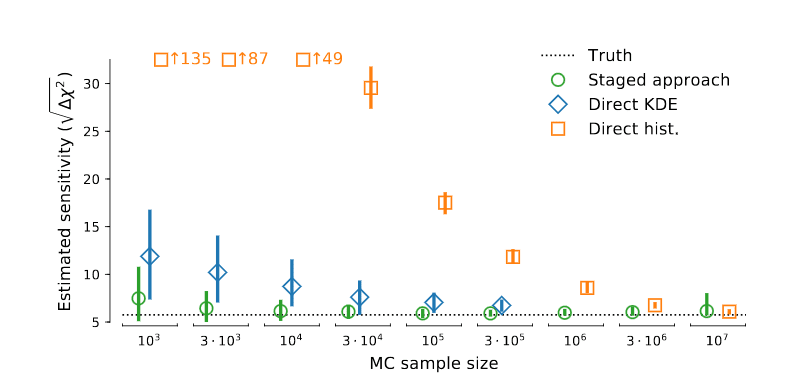

The trick is in calculating and applying the physics and detector effects not on each individual event, but on groups of events that have similar enough properties. To group the events, a dense but finite grid is used, which can be optimized depending on, for example, the symmetries of the detector or the specific properties we want to measure. The researchers also use improved interpolation and smoothing techniques, which make it possible to go from one stage to the next by applying a transformation to the output of the previous stage. More standard techniques, by contrast, rely on calculating a weight for every individual simulated event and for every scenario (set of parameters) considered.
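To make the idea of staged, grid-based transformations concrete, here is a minimal Python sketch. It is not the collaboration's code: the grid, the power-law flux, the two-flavor vacuum oscillation formula, the toy detector response, and the Gaussian energy smearing are all simplifying assumptions chosen for illustration, and every function name is hypothetical. It only shows how each stage can act on a binned map rather than on individual events, so that changing a physics parameter means re-running one cheap array operation.

```python
import numpy as np

# Hypothetical analysis grid: true energy (GeV) x cosine of the zenith angle.
energy_edges = np.logspace(0, 2, 21)        # 1 to 100 GeV, 20 bins
coszen_edges = np.linspace(-1.0, 0.0, 11)   # up-going neutrinos, 10 bins
energy = np.sqrt(energy_edges[:-1] * energy_edges[1:])  # geometric bin centers
coszen = 0.5 * (coszen_edges[:-1] + coszen_edges[1:])
E, CZ = np.meshgrid(energy, coszen, indexing="ij")

EARTH_DIAMETER_KM = 12742.0


def stage_flux(E):
    """Stage i: toy unoscillated flux per bin (power law, arbitrary units)."""
    return E ** -2.7


def stage_oscillation(flux_map, E, CZ, sin2_2theta=1.0, dm2=2.5e-3):
    """Stage ii: two-flavor vacuum survival probability applied per bin.

    dm2 is in eV^2, the baseline in km, and the energy in GeV.
    The baseline is the chord through the Earth (atmosphere height ignored).
    """
    baseline = -CZ * EARTH_DIAMETER_KM
    p_survive = 1.0 - sin2_2theta * np.sin(1.267 * dm2 * baseline / E) ** 2
    return flux_map * p_survive


def stage_detector(osc_map, E):
    """Stage iii: toy detection and selection response that rises with energy."""
    return osc_map * E ** 1.5


def energy_smearing(energy, width=0.2):
    """Toy resolution matrix smear[true, reco], Gaussian in log10(energy)."""
    log_e = np.log10(energy)
    kernel = np.exp(-0.5 * ((log_e[:, None] - log_e[None, :]) / width) ** 2)
    return kernel / kernel.sum(axis=1, keepdims=True)  # each true bin sums to 1


def stage_reco(det_map, smear):
    """Stage iv: move expected counts from true to reconstructed energy bins."""
    return smear.T @ det_map


# Parameter-independent pieces are computed once and reused.
FLUX_MAP = stage_flux(E)
SMEAR = energy_smearing(energy)


def make_template(dm2, sin2_2theta=1.0):
    """Expected reconstructed map for one set of oscillation parameters."""
    osc_map = stage_oscillation(FLUX_MAP, E, CZ, sin2_2theta, dm2)
    return stage_reco(stage_detector(osc_map, E), SMEAR)


if __name__ == "__main__":
    for dm2 in (2.0e-3, 2.5e-3, 3.0e-3):
        template = make_template(dm2)
        print(f"dm2={dm2:.1e} eV^2  total expected (arb. units): {template.sum():.3f}")
```

In this sketch, scanning the oscillation parameters touches only a 20 x 10 array per scenario, whereas a per-event approach would recompute a weight for every simulated event each time.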

“These techniques have been vital for studying and optimizing the performance of the upcoming IceCube low-energy upgrade program. Without them, we’d probably still be computing for the next couple of years,” explains Sebastian Böser, an IceCube researcher at the University of Mainz and one of the lead authors of this work.

The authors used this new technique in studies of the sensitivity to the neutrino mass ordering for the planned lower energy extension of IceCube. These studies yield more accurate and robust results while reducing the amount of simulation produced by two orders of magnitude.

“We hope this paper will help in the understanding of how IceCube upgrade studies are performed, and that the described methods could be of use to other people in the community,” says Philipp Eller, a postdoctoral researcher at Penn State University and also a lead author of this paper.

+ info “Computational Techniques for the Analysis of Small Signals in High-Statistics Neutrino Oscillation Experiments,” IceCube-Gen2 Collaboration: M. G. Aartsen et al., Nucl. Instrum. Meth. A 977 (2020) 164332, sciencedirect.com, arxiv.org/abs/1803.05390